Update: Thanks again to all those who responded to the survey.

48 respondents, more than double the 21 from my first attempt, and a good representative split between security vendors and enterprise professionals. The results are below. I may make this survey a monthly activity provided the response is good and people find the data helpful.

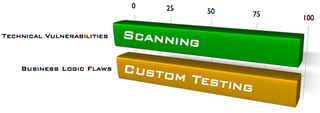

My Observations

- I was a bit amazed by the significant portion of web application VAs combining the black box and white box testing methods. I knew black box would be the most common approach, but I would have figured the pure source code reviews and the combo approach would have been statistically swapped. I may need to dig in more here and ask what benefits people are seeing as a result.

- 73% of those performing web application vulnerability assessments never or only sometimes use commercial scanner products. It’s hard to say if this is good/bad/increasing/decreasing or otherwise. Certainly people want tools. People love their open source tools; the vast majority use them. Be mindful that open source webappsec tools are mostly productivity tools, not scanners like those asked about in question 3, so people are not opting for one over the other. There is a lot of room to dig in here with future questions as to why people use or don’t use certain types of products.

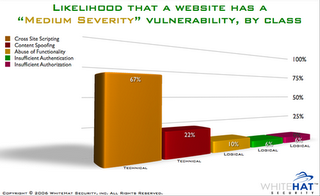

- People see XSS as slightly more dangerous and widespread than SQL Injection. But what’s clear is they find both issues important and weigh them heavily over the rest. Also surprising: prior to the survey, I would have thought few assessors would be checking for CSRF issues. The fact is most of them are testing for CSRF at least some portion of the time. And imagine, this issue is not on any vulnerability list. This will change soon.

- 3/4 of assessors agree more than 50% of websites have serious vulnerabilities. They also believe it would take them less than a day to find a serious issue in most of them. And 65% of assessors already knew of a previously undisclosed incident (web hack) that led to fraud, identity theft, extortion, theft of intellectual property, etc. That’s a sobering trifecta for the state of web application security.

- When asked what activity most improves security, the answers were evenly split across modern software development frameworks, secure software and/or awareness training, and a stronger security presence in the SDLC. The exception was industry regulation, which assessors felt was not a driver of security. Interesting.

Description

Several weeks ago I sent out an informal email survey to several dozen people who work in web application security professional services. It consisted of a handful of multiple choice questions designed to help us understand more about the industry. The results were interesting enough to try again, this time with a few more questions and distributed to a wider audience.

If you perform web application vulnerability assessments, whether personally or professionally, then this survey is for you. I know most of us dislike taking surveys, but the more people who respond the more informative and representative the data will be.

The survey should take about 15 minutes to complete. All responses must be sent in by Nov 14.

Guidelines

- Should be filled out by those who perform web application vulnerability assessments

- Copy-paste the questions below into an email and send your answers to me (jeremiah __at__ whitehatsec.com)

- To help curb fake submissions, please use your real name, preferably from your employer's domain.

- Submissions must be received by Nov 14.

Notice: Results based on data collected will be published. (Absolutely no names and contact information will be released to anyone.)

Questions

1) Who do you perform web application vulnerability assessments for?

a) Security vendor

b) Enterprise

c) Entertainment and/or educational purposes

d) Other (please specify)

A: 40% B: 48% C: 8% D: 4%

2) What is your most common web application vulnerability assessment methodology?

a) Source code review

b) Black Box

c) Combination of A and B

A: 2% B: 54% C: 44%

3) Do you use commercial vulnerability scanner products during your assessments?

(Acunetix, Cenzic, Fortify, NTOBJECTives, Ounce Labs, Secure Software, SPI Dynamic, Watchfire, etc.)

a) Never

b) Sometimes

c) 50/50

d) Most of the time

e) Always

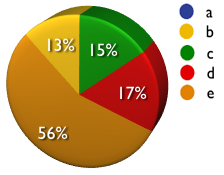

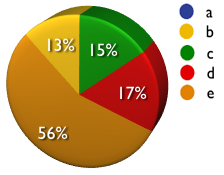

A: 52% B: 21% C: 0% D: 6% E: 21%

4) Do you use open source tools during your assessments?

(Paros, Burp, Live HTTP headers, Web Scarab, CAL9000, Nikto, Wikto, etc.)

a) Never

b) Sometimes

c) 50/50

d) Most of the time

e) Always

A: 0% B: 13% C: 15% D: 17% E: 56%

5) What is your preferred severity rating system for web application vulnerabilities?

a) DREAD

b) TRIKE

c) CVSS

d) Proprietary

e) Other

A: 8% B: 2% C: 6% D: 67% E: 17%

6) What is the single most dangerous and widespread web application vulnerability?

a) Cross-Site Scripting (XSS)

b) Cross-Site Request Forgery (CSRF)

c) PHP Include

d) SQL Injection

e) Other (please specify)

A: 48% B: 6% C: 10% D: 31% E: 4%

7) Are Cross-Site Request Forgeries (CSRF) part of your vulnerability assessment methodology?

a) Yes

b) No

c) Sometimes

d) Huh?

A: 42% B: 10% C: 46% D: 2%

8) From your vulnerability assessment experience, how many websites have serious web application vulnerabilities?

(reveal private information, escalate privileges, or allow remote compromise)

a) All or nearly all

b) Most

c) 50/50

d) Some

e) No idea

A: 17% B: 38% C: 21% D: 25% E: 0%

9) How long would it take you to find a single serious web application vulnerability in MOST public websites?

a) Few minutes

b) Hour or two

c) Day and a night

d) A few days

e) Don't know, never tried

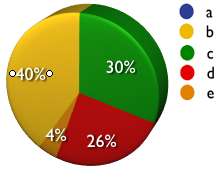

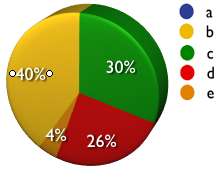

A: 23% B: 35% C: 19% D: 2% E: 21%

10) How long after a web application vulnerability assessment are most of the severe issues resolved?

a) Within hours

b) The next couple days

c) During the next scheduled software update

d) Months from discovery

e) Just before the next annual assessment

A: 0% B: 40% C: 30% D: 26% E: 4%

11) What organizational activity MOST improved the security of their websites?

a) Using modern software development frameworks (.NET, J2EE, Ruby on Rails, etc)

b) Secure software and/or awareness training

c) A stronger security presence in the SDLC

d) Compliance to industry regulations

e) Other (please specify)

A: 21% B: 28% C: 21% D: 2% E: 28%

12) Are you privy to any undisclosed (not made public) malicious attacks made against a web application?

(fraud, identity theft, extortion, theft of intellectual property, etc.)

a) No

b) One

c) A few

d) Too many to count

A: 35% B: 7% C: 41% D: 17%
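
As a quick sanity check, some of the headline figures in the observations can be reproduced by adding up the answer percentages listed above. The sketch below is only an illustration: the percentages are copied from questions 3, 7 and 12, while the grouping of answer choices and the helper name `combined` are assumptions made for this example, not part of the survey itself.

```python
# Percentages copied from the results above (questions 3, 7 and 12).
results = {
    3: {"A": 52, "B": 21, "C": 0, "D": 6, "E": 21},   # commercial scanner use
    7: {"A": 42, "B": 10, "C": 46, "D": 2},           # CSRF in methodology
    12: {"A": 35, "B": 7, "C": 41, "D": 17},          # undisclosed incidents
}


def combined(question, choices):
    """Sum the reported percentages for the given answer choices (hypothetical helper)."""
    return sum(results[question][choice] for choice in choices)


# Assumed groupings: "Never" + "Sometimes" for Q3, "Yes" + "Sometimes" for Q7,
# and every answer other than "No" for Q12.
never_or_sometimes_commercial = combined(3, "AB")
csrf_yes_or_sometimes = combined(7, "AC")
knows_undisclosed_incident = combined(12, "BCD")

print(never_or_sometimes_commercial)  # 73
print(csrf_yes_or_sometimes)          # 88
print(knows_undisclosed_incident)     # 65
```

The first and last values match the 73% and 65% quoted in the observations. The percentages are rounded, so the per-question totals do not always sum to exactly 100.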

In similar fashion to my buffer overflows article, I set my sights on Myth-Busting AJAX (In)-Security.
