"Once flaws have been identified, what is my motivation to fix them? If you can't give me the likelihood of attack, and what I stand to lose by it being exploited, how many dollars should I invest to repairing it?"

As security practitioners, we keep saying how much development teams need to learn in order to make secure software. I'd say there's another side to that coin - security practitioners need to be able to measure the impact of particular threats in terms of dollars, so that we don't just reveal vulnerabilities and the threats that might exploit them, but also what the business stands to lose if the vulnerability isn't fixed.

Very well stated, and it got me thinking about how this could be done. For some reason the movie Fight Club popped into my head, with the scene about how Jack, as an automotive manufacturer's recall coordinator, applied "the formula". Seemed like a fun way to go about it. :)

JACK (V.O.)

I'm a recall coordinator. My job is to apply the formula.

....

JACK (V.O.)

Take the number of vehicles in the field, (A), and multiply it by the probable rate of failure, (B), then multiply the result by the average out-of-court settlement, (C). A times B times C equals X...

JACK

If X is less than the cost of a recall, we don't do one.

BUSINESS WOMAN

Are there a lot of these kinds of accidents?

JACK

Oh, you wouldn't believe.

BUSINESS WOMAN

... Which... car company do you work for?

JACK

A major one.

I know, I know, I broke the first rule of Fight Club. Anyway, I have no idea how "real" this formula is or if it's actually applied, but it seemed to make sense. I wondered if something similar could be applied to web application security. And if nothing else, it's an entertaining exercise.

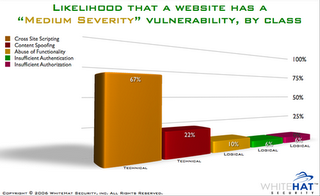

Take the number of known vulnerabilities in a website, (A), and multiply it by the probability of malicious exploitation, (B), then multiply the result by the average financial cost of handling a security incident, (C). A times B times C equals X...

If X is less than the cost of fixing the vulnerabilities, we won't.
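To make the arithmetic concrete, here is a minimal Python sketch of that formula. The function names (`expected_loss`, `should_fix`) and every number in the example are made up for illustration, not real incident data, and it assumes B is a single probability that applies equally to each vulnerability.

```python
def expected_loss(vulnerabilities: int,
                  exploit_probability: float,
                  avg_incident_cost: float) -> float:
    """Return X = A * B * C from the formula above.

    vulnerabilities      -- A, number of known vulnerabilities in the website
    exploit_probability  -- B, probability of malicious exploitation (0 to 1)
    avg_incident_cost    -- C, average cost of handling a security incident
    """
    if vulnerabilities < 0:
        raise ValueError("vulnerabilities must be non-negative")
    if not 0.0 <= exploit_probability <= 1.0:
        raise ValueError("exploit_probability must be between 0 and 1")
    if avg_incident_cost < 0:
        raise ValueError("avg_incident_cost must be non-negative")
    return vulnerabilities * exploit_probability * avg_incident_cost


def should_fix(vulnerabilities: int,
               exploit_probability: float,
               avg_incident_cost: float,
               fix_cost: float) -> bool:
    """Fix only when X is NOT less than the cost of fixing."""
    x = expected_loss(vulnerabilities, exploit_probability, avg_incident_cost)
    return x >= fix_cost


if __name__ == "__main__":
    # Hypothetical inputs: 10 known vulnerabilities, a 5% chance of
    # exploitation, and $20,000 per incident.
    x = expected_loss(10, 0.05, 20_000)
    print(f"X = ${x:,.2f}")
    print("Fix at $15,000?", should_fix(10, 0.05, 20_000, fix_cost=15_000))
    print("Fix at $8,000?", should_fix(10, 0.05, 20_000, fix_cost=8_000))
```

With these invented inputs, X comes out to $10,000, so a $15,000 fix would be skipped and an $8,000 fix would go ahead. The code is the easy part; everything hinges on how believable B and C are.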

Sounds like it could work, provided you can be somewhat accurate in filling in the variables, which is the hard part. The thing is, this process probably isn't a suitable task for an information security person. Maybe we need to seek the assistance of an economist or a probability theorist and see what they have to say.